Home » Introduction to Secure Prompt Management Lifecycle

The Prompt Management Lifecycle (PML) refers to the systematic process of designing, deploying, refining, and governing prompts for Generative AI (GenAI) models such as GPT, DALL-E, LLaMA, Gemini or similar systems. Managing prompts effectively ensures consistent, reliable, and optimized interactions with AI models, particularly when they are integrated into production systems like SaaS platforms, regulated industries, cybersecurity systems, e-commerce & retail, content generation platforms or may be others.

In parallel, a Secure PML (SPML) ensures that every phase of the lifecycle i.e. from requirements to deprecation — addresses security, privacy, compliance, and ethical considerations. This is particularly critical as prompts and outputs are integral to how generative AI systems interact with users, process sensitive data, and deliver meaningful outcomes.

The consideration of SPML in a business includes one or more of the following –

2. Prevention of Prompt Manipulation

3. Ethical and Bias Mitigation

4. Compliance with Regulatory Requirements

5. Ensuring Availability and Reliability

6. Prevention of Adversarial Attacks

7. Transparency and Auditability

8. Performance and Optimization

9. Secure Collaboration in Multi-Tenant Environments

10. Safeguarding Intellectual Property

Next, to achieve SPML in an organization, the SPML phases are defined. And, organizations that integrate a SPML can confidently deploy AI solutions while maintaining compliance, protecting user data, and safeguarding their systems against evolving threats.

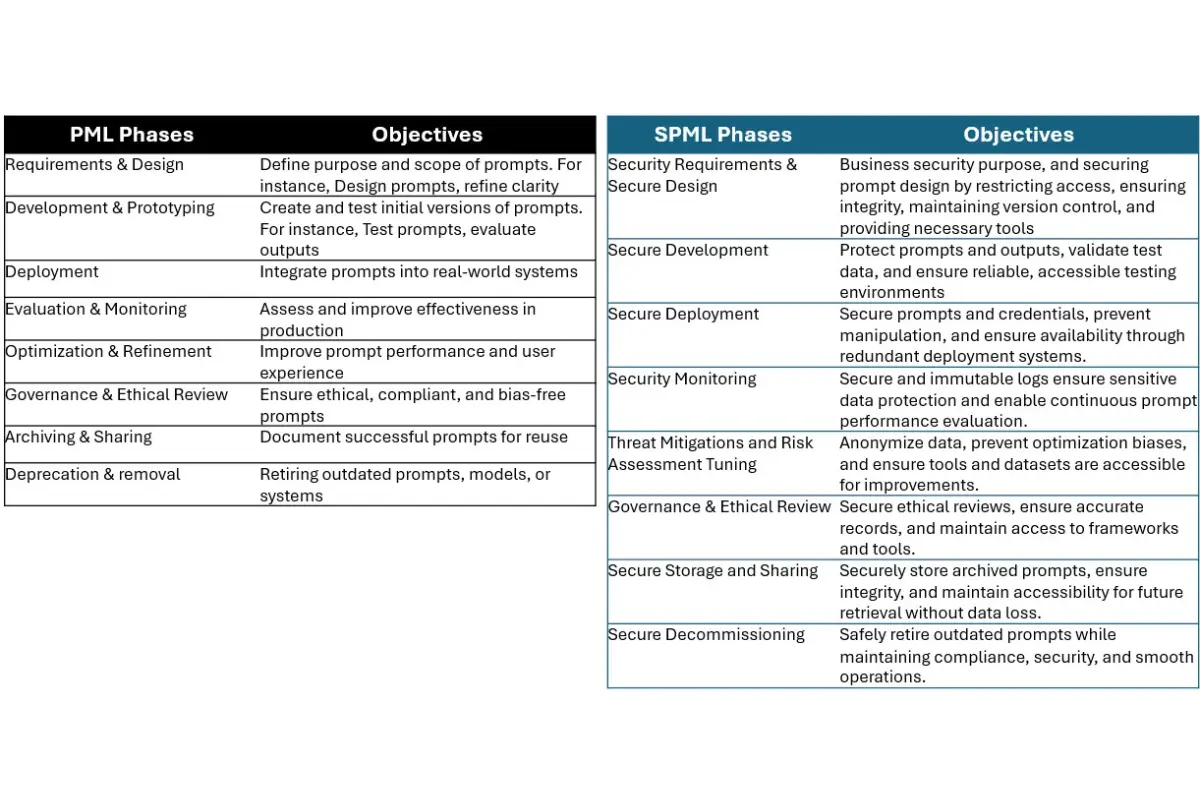

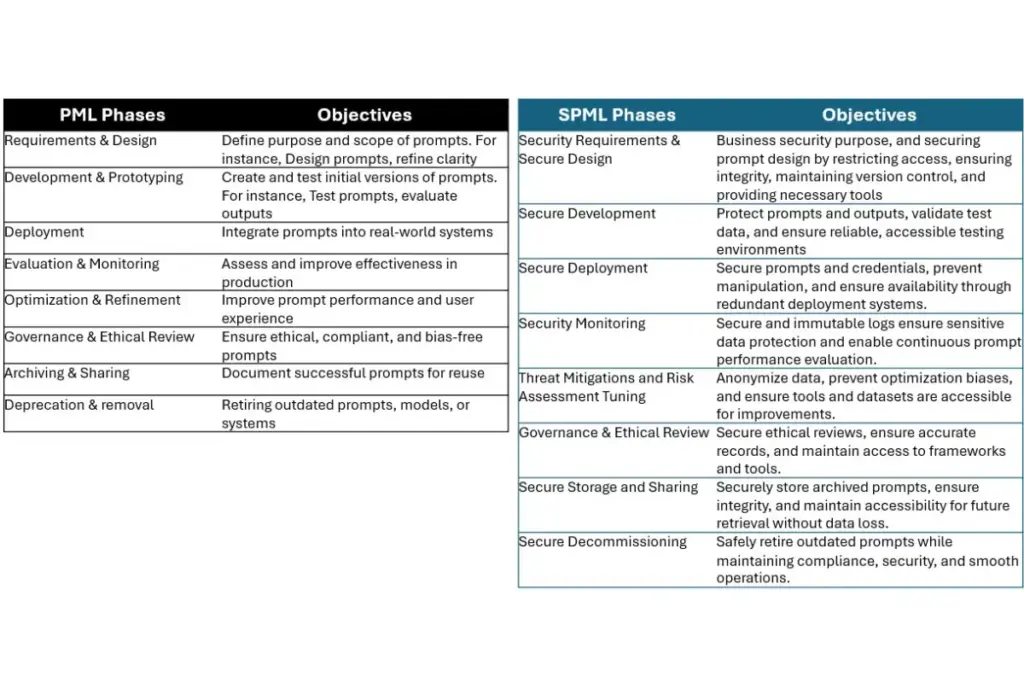

Below table lists phases of the PML and corresponding secure PML, along with key objectives each try to achieve –

This section here onwards explains security considerations, potential threats and mitigations and tools required in enabling SPML phases –

The security team or owner to ensure that business requirements for prompt design are not shared with unauthorized individuals, and sensitive or proprietary prompt logic remains protected. And, verify that initial prompt designs are not tampered, version control and audit trails are maintained. The access to design documentation and tools during this phase should be made available and delays to be avoided to the team participating here.

Example:

Potential Threats

Mitigations

Example Tools

In this phase, prompts and test outputs from unauthorized access, especially when dealing with sensitive data must be protected. And, validation should be done for test data and outputs to prevent corrupted prompts or faulty input data from generating biased or incorrect results. For iterative development, the prompt testing environments need to be security hardened, and should be reliable and accessible to the intended users.

Example:

Potential Threats

Mitigations

Example Tools

In this phase, preventing unauthorized access to prompts embedded in APIs or systems to be enforced, and API keys and credentials must be protected. The deployment owner to ensure that prompts are not manipulated during deployment or integration else could lead to undesired or harmful outputs. And, ensure that prompts are deployed in redundant systems so they are always available in production environments.

Example:

Potential Threats

Mitigations

Example Tools

In this phase, the logging and monitoring systems need to ensure they don’t expose sensitive inputs, outputs, or user interactions with the AI, and ensure that the logs are immutable and cannot be tampered with to falsify evaluations.

Monitoring systems should be operational and accessible to enable continuous evaluation of prompt performance.

Example:

Potential Threats

Mitigations

Example Tools

Generative AI systems are dynamic by nature and constantly evolving through refinements and optimizations. During this process new vulnerabilities may emerge due to changes in functionality, and security flaws could be inadvertently introduced, and compliance requirements might be overlooked as performance tuning becomes the focus.

In this phase, security professionals need to focus on analysing, optimizing, and fine-tuning the security controls those are associated with prompts, and its performance and operational environment.

Ensure sensitive data used for optimization (e.g., user queries) is anonymized or pseudonymized to protect user privacy and optimization processes do not introduce errors, bias, or harmful behaviour into prompts.

Example:

Potential Threats

Mitigations

Example Tools

The sensitive data and discussions during ethical reviews of prompts need to be protected to prevent unauthorized disclosures. Consider necessary safeguards to ensure that all audit and ethical review findings are accurately recorded and not tampered with. Maintain access to ethical review frameworks, guidelines, and tools during governance processes.

Example:

Potential Threats

Mitigations

Example Tools

In this phase, archived prompts and associated metadata from unauthorized access or leaks need to be protected, and one need to ensure that archived prompts and metadata are preserved in their original form and not corrupted over time. Additionally, archived prompts and metadata should be accessible for future retrieval without delays or data loss.

Example:

Potential Threats

Mitigations

Example Tools

Prevent unauthorized access to retired prompts and metadata through encryption, access control, and deletion.

Ensure retired components or prompts are securely removed without affecting active systems or data.

Retire prompts only after ensuring replacements are fully deployed and operational, minimizing downtime.

Example:

Encrypt and securely delete sensitive data, revoke access.

Ensure no unintended impact on active systems during decommissioning.

Avoid downtime by deploying replacement systems before retiring old ones.

Potential Threats

Mitigations

Example Tools

Safe Harbor

The content shared on this blog is for educational and informational purposes only and reflects my personal views and experiences. It does not represent the opinions, strategies, or endorsements of my current or previous employers. While I strive to provide accurate and up-to-date information, this blog should not be considered professional advice. Readers are encouraged to consult appropriate professionals for specific guidance.